The Goldilocks Approach: Hierarchical Classification with AI_QUERY in Databricks

Leverage Databricks `AI_QUERY` to tackle complex, context-dependent hierarchical classification problems that traditional ML and simple LLM prompts cannot solve.

Classification Machine Learning problems have been around for a long time. Traditional approaches like decision trees and SVMs have dominated the landscape, and with the rise of NLP, we gained powerful tools for text classification using techniques like TF-IDF and word embeddings. Now, we have Large Language Models that understand context and nuance in ways that traditional models often can’t match. This opens up opportunities to automate classification processes, especially in complex scenarios where rigid classification rules break down, such as hierarchical classification.

How can you take advantage of the latest frontier in classification? This blog will show you how to use AI_QUERY in Databricks to run batch inference for complex hierarchical classifications with LLMs.

As always, choose the best approach for your problem! There are many occasions when a classical approach is a better choice. However, if:

Your historical data is not trustworthy (e.g. it may be inconsistent, difficult to validate, or labeled by multiple people with different interpretations; you can’t trust that the patterns held therein are going to be useful in a classical machine learning setup)

Your categories are flexible (e.g. you need to be able to add a new category on the whims of your business partners without retraining an entire model)

You’re dealing with nuanced, context-dependent classifications (e.g. rigid rules fail; maybe “billing dispute” vs “billing inquiry” depends on subtle linguistic cues that are difficult to encode as features)

… then read on to learn how to use AI_QUERY for hierarchical classification!

The Goal

Let’s say that we’re a telecommunications company and we have a lot of customer call transcripts coming in that we want to classify along four levels: Domain (Level 1) → Category (Level 2) → Problem Type (Level 3) → Root Cause (Level 4). This hierarchy creates a comprehensive support taxonomy covering everything from network outages and billing disputes to device issues and order management, so our business team can gain a lot of insights once all of these transcripts are classified correctly. The only obstacle is actually classifying these transcripts. How should we do that?

If you’re already familiar with the power of AI_QUERY, which allows you to query LLM endpoints via SQL for batch workloads, you could jump right into the Databricks SQL editor or a notebook and use the SQL function right away, relying on a monster prompt that attempts to take each record and assign the right hierarchy in one shot.

The Problem with the “Everything at Once” Approach

Unfortunately, if we have 12 Domains, and each Domain contains 5-6 Categories, and each Category has 3+ Problem Types, and each Problem Type has 5+ Root Causes, we have at minimum 900 hierarchical paths that a single transcript could take.

Combinatorial explosion! From a statistical perspective, we’re asking an LLM to perform a 900-class classification problem—the kind of task where even specialized neural networks start sweating.

There are a couple of issues with treating each of the 900 options as its own unique value:

More choices = worse outcomes: Imagine a 900x900 confusion matrix; a classification problem of this size will very likely result in poor precision and poor recall.

The “needle in a haystack” problem: When you present an LLM with a massive list of options, accuracy degrades significantly. False Negatives are easy when there are 899 other options, some of which sound very similar.

Prompt complexity becomes unmaintainable (and more expensive): Your prompt becomes a short story, making it difficult to debug, version control, and understand what instructions the model is actually following - and on top of that you’re paying for all those tokens each time you send a request!

What About Individual Level Classification?

On the other hand, if we look at each level individually, we greatly reduce the number of options per record. We only need to predict across 12 Domains, for example, and once we have the Domain, we only need to predict across 5 or 6 Categories, and so on down the levels. This approach will improve shrink the prediction space and likely improve accuracy, precision, and recall. The downside here is that we’re now running four sequential SQL queries. Depending on the model we’re using, this could get expensive and/or slow.

By now you might be thinking, “So that porridge is too cold, and this one is too hot… How do I use AI_QUERY for hierarchical classification???”

The Goldilocks Solution: Hierarchically-Aware Classification

To get just-right porridge, we can find a balance of the two approaches above by running two queries: the first will use a simple prompt to classify Level 1, and then the second will use a dynamic prompt to classify Levels 2-4. Here’s our recipe:

Create a hierarchies table

Build a gold-standard evaluation dataset

Execute your Domain (Level 1) prompt

Execute your Levels 2-4 prompt (with dynamic hierarchy filtering)

Step 1: Build Your Hierarchy Table

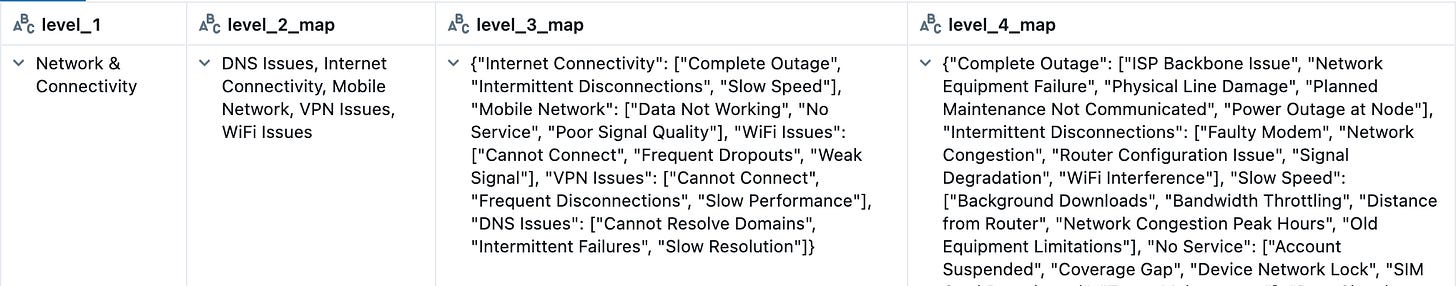

First, we need to build a table of our classification hierarchies. This table will be the reference for our second dynamic AI_QUERY prompt. It should look something like the schema here:

transcript_classification_hierarchy_table

├── level_1 (string) -- Domain

├── level_2_map (string) -- Category

├── level_3_map (string) -- Problem Type

└── level_4_map (string) -- Root CauseEach row represents a valid path through your hierarchy. This is your source of truth that ensures we only provide valid paths to the LLM.

Here’s a sample record:

Step 2: Build Your Evaluation Dataset

Next, we need to make sure we have a solid evaluation dataset. If possible, grab an unsuspecting nearby SME and beg them to help you build this dataset.

The number of examples depends on how many categories you have in your dataset, but aim for at least 50-100 labeled examples covering your major categories. Try to avoid a scenario where you spend a lot of time prompt engineering, only to realize your ground truths are not all that accurate to begin with and you’ve been tuning your prompt in the wrong direction (speaking from personal experience, this is not fun).

Bonus: Having a gold-standard evaluation dataset allows you to continually try new models and model versions as they’re released and use the cheapest, fastest version that passes your evaluation metrics. This is deeply unsexy work, but it is possibly the most valuable.

Step 3: Classify Level 1 (Domain)

Once you have your hierarchical table and evaluation dataset, we’re ready to run our first AI_QUERY SQL statement: classifying Level 1. In this first batch inference round, we focus only on classifying the Domain. Since all the hierarchies stem from this first choice, getting it right is critical—but with only 12 options, our LLM has a much better chance of getting the classifications right. Here’s a sample query, using Llama 3.3 70b. We concatenate the prompt, which consists of the 12 Domain options, as well as the transcript itself and an instruction to only return the category name.

%sql

CREATE OR REPLACE TABLE catalog.schema.call_transcripts_l1_predictions AS

SELECT

call_id,

transcript_text,

AI_QUERY(‘databricks-meta-llama-3-3-70b-instruct’,

CONCAT(

‘Classify the transcript into one of the following categories:

- Network & Connectivity

- Billing & Payments

- Account Management

- Device & Equipment

- Service Provisioning

- Technical Support

- Mobile Services

- Internet Services

- TV & Streaming

- Voice Services

- Security & Privacy

- Sales & Orders\\n\\n’,

‘Transcript: ‘, transcript_text, ‘\\n\\n’,

‘Return only the category name.’

)

)

AS level_1_classification

FROM catalog.schema.call_transcripts_rawSpend time here refining the prompt and testing out a variety of models to get high accuracy at Level 1 before moving on to the next step. In this example, my prompt is very simple: I don’t even bother defining my categories. In your own use cases, adding in descriptions of each category may be beneficial.

Step 4: Classify Levels 2-4

Once we’re happy with the Level 1 accuracy, we create our second AI_QUERY SQL statement. This time, we will write a much more complex prompt that 1) builds upon the L1 classifications and 2) joins with the hierarchical mappings from Step 1. This way, our prompt only includes the valid options for Levels 2 through 4 that correspond to the predicted Level 1 Domain.

%sql

CREATE OR REPLACE TABLE catalog.schema.call_transcripts_all_classifications AS

SELECT

call_id,

transcript_text,

l1.level_1_classification,

AI_QUERY(‘databricks-meta-llama-3-3-70b-instruct’,

CONCAT(

‘Classify the call transcript into Level 2, Level 3, and Level 4 subcategories. Strictly adhere to the hierarchies as listed below.\n\n’,

‘Transcript: ‘, l1.transcript_text, ‘\n’,

‘Level 1: ‘, l1.level_1_classification, ‘\n\n’,

‘Valid Level 2 options: ‘, hier.level_2_map, ‘\n\n’,

‘Valid Level 3 options by Level 2: ‘, hier.level_3_map, ‘\n\n’,

‘Valid Level 4 options by Level 3: ‘, hier.level_4_map, ‘\n\n’,

‘Return ONLY valid JSON (no markdown): {”level_2”: “X”, “level_3”: “Y”, “level_4”: “Z”}\n’

)

) AS classification_json

FROM catalog.schema.call_transcripts_l1_predictions l1

INNER JOIN catalog.schema.transcript_classification_hierarchy_table hier

ON l1.level_1_classification = hier.level_1The query above might feel a bit abstract, so let’s take a look at what the prompt will actually look like for the L1 Domain “Billing & Payments”:

Classify the call transcript into Level 2, Level 3, and Level 4 subcategories. Strictly adhere to the hierarchies as listed below.

Transcript: [transcript not shown for brevity]

Level 1: Billing & Payments

Valid Level 2 options: Auto-Pay, Invoice Issues, Payment Plans, Payment Processing, Refunds

Valid Level 3 options by Level 2: {”Invoice Issues”: [”Cannot Access Invoice”, “Incorrect Charges”, “Missing Credits”], “Payment Processing”: [”Duplicate Payment”, “Payment Declined”, “Payment Not Posted”], “Auto-Pay”: [”Cannot Disable”, “Not Working”, “Setup Failed”], “Payment Plans”: [”Application Denied”, “Early Payoff Request”, “Missed Payment”], “Refunds”: [”Incorrect Amount”, “Not Received”, “Request Denied”]}

Valid Level 4 options by Level 3: {”Incorrect Charges”: [”Double Billing”, “Proration Error”, “Service Not Ordered”, “Tax Calculation Error”, “Wrong Rate Applied”], “Missing Credits”: [”Adjustment Not Posted”, “Manual Credit Not Entered”, “Promotion Not Applied”, “Refund Not Processed”, “System Processing Delay”], “Cannot Access Invoice”: [”Account Access Restricted”, “Email Not Received”, “PDF Generation Error”, “Portal Login Issue”, “System Maintenance”], “Payment Declined”: [”Bank Fraud Detection”, “Card Expired”, “Incorrect Card Information”, “Insufficient Funds”, “Payment Gateway Error”], “Payment Not Posted”: [”Bank Processing Time”, “Manual Entry Required”, “Processing Delay”, “System Synchronization Issue”, “Wrong Account Number Entered”], “Duplicate Payment”: [”Auto-Pay and Manual Payment”, “Browser Refresh Error”, “Customer Submitted Twice”, “Multiple Payment Methods Active”, “System Glitch”], “Not Working”: [”Auto-Pay Disabled”, “Bank Account Closed”, “Insufficient Funds”, “Payment Method Expired”, “System Configuration Error”], “Setup Failed”: [”Account Not Eligible”, “Bank Verification Failed”, “Incompatible Payment Type”, “Invalid Account Information”, “Portal Technical Issue”], “Cannot Disable”: [”Balance Owed”, “Contractual Requirement”, “Pending Transaction”, “Portal Access Issue”, “System Processing Lag”], “Application Denied”: [”Account Not Eligible”, “Balance Too Low”, “Credit Check Failed”, “Existing Plan Active”, “Previous Default History”], “Missed Payment”: [”Auto-Pay Not Set Up”, “Customer Forgot”, “Financial Hardship”, “Payment Date Confusion”, “Payment Method Failed”], “Early Payoff Request”: [”Approved With Fee”, “Approved Without Fee”, “Denied Per Terms”, “Requires Balance Verification”, “Under Review”], “Not Received”: [”Check Lost in Mail”, “Processing Time Not Elapsed”, “Refund Method Changed”, “System Error”, “Wrong Bank Account”], “Incorrect Amount”: [”Calculation Error”, “Credits Applied First”, “Fees Deducted”, “Partial Refund Per Policy”, “Tax Adjustment”], “Request Denied”: [”Contractual Terms”, “Outside Refund Window”, “Previous Refund Given”, “Promotional Restriction”, “Service Already Used”]}

Return ONLY valid JSON (no markdown): {”level_2”: “X”, “level_3”: “Y”, “level_4”: “Z”}Now, instead of asking the LLM to consider 900 paths, we’re saying, “Hey, you already decided this is a ‘Network & Connectivity’ issue. Here are the valid paths within that domain. Pick one.” We’ve gone from a 900-class problem to a ~75-class problem. Our accuracy, precision, and recall will benefit.

Now We Have Our Hierarchical Classifications!

With this approach, you get:

Improved accuracy by reducing overall cognitive load on the LLM

Lower token costs by only including relevant options in each prompt

Valid hierarchies that make sense together

Easier debugging and iteration when you evaluate Level 1 accuracy independently

Flexibility to add new categories without rewriting your entire classification logic

Wrapping Up

AI_QUERY in Databricks makes it remarkably easy to leverage LLMs for complex classification tasks without managing API calls, rate limits, or infrastructure. By combining it with a hierarchically-aware approach, you can tackle complex classification problems. Break down the complexity, give your LLM manageable choices at each step, and let the structure of your domain guide the way.